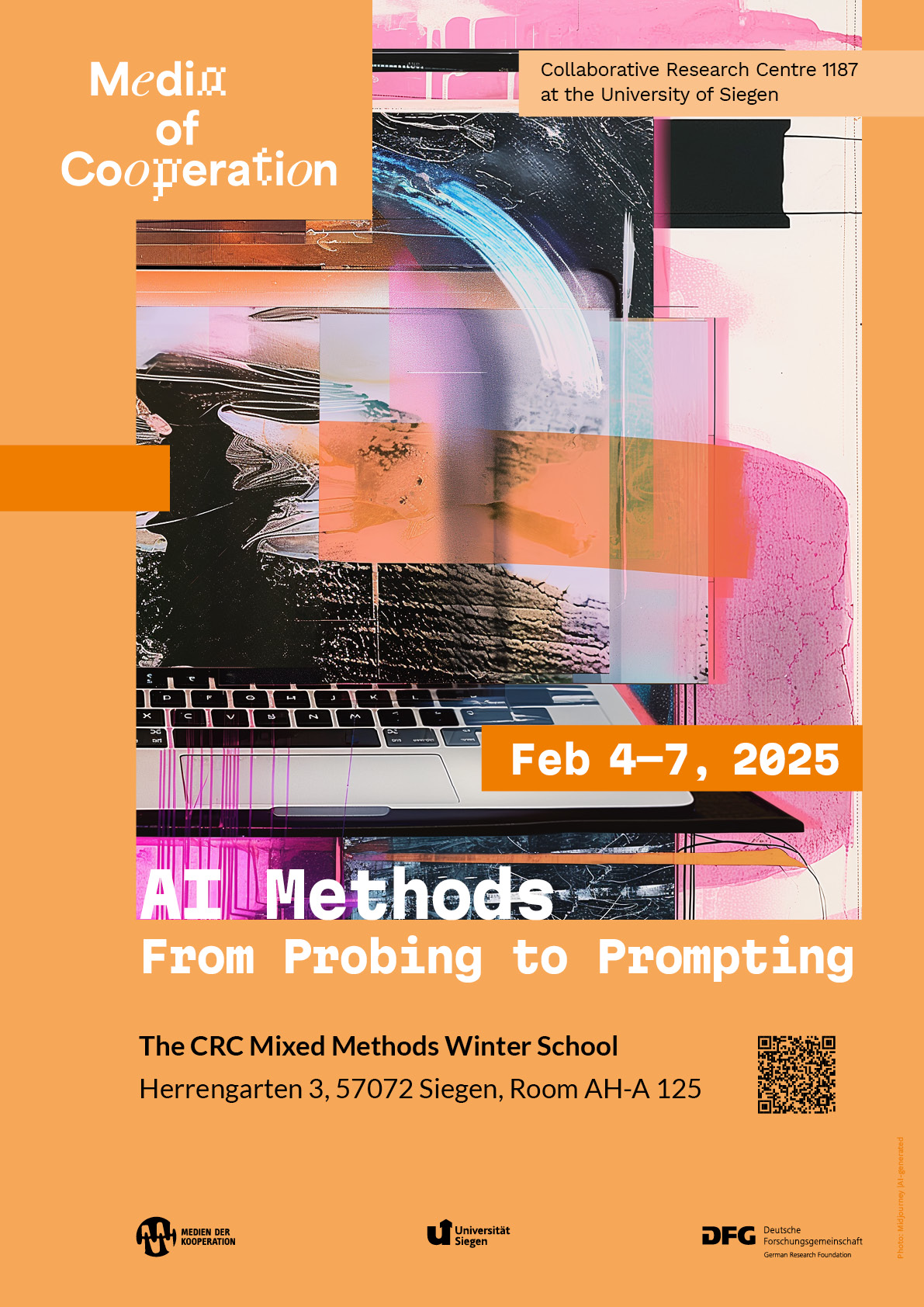

Call for Participation: Winter School on AI Methods

AI Methods: From Probing to Prompting, 4–7 February 2025

The Collaborative Research Center 1187 “Media of Cooperation” is organizing a one-week winter school at the University of Siegen and invites graduate students, postdoctoral researchers, and media studies scholars interested in the intersections of AI methods, digital visual methodologies, visual social media, and platforms. The winter school explores the implications of AI methods for new forms of sense-making and human-machine co-creation. Please register via the registration form by December 15, 2024.

About the Winter School

As artificial intelligence (AI) technologies rapidly evolve, the ways in which we perceive and process information are fundamentally changing. The shift from computational vision, recognition, and classification to generative AI lies at the core of today’s technological landscape, fueling societal debates across different areas—from open-source intelligence and election security to propaganda, art, activism, and storytelling.

Computer vision, a sophisticated agent of pattern recognition, emerged with the rise of machine learning, sparking critical debates around the fairness of image labelling and the deep-seated biases in training data. Today, models like Midjourney, Stable Diffusion, DALL-E, and, more recently, Grok are not just recognizing patterns; they are generating them, synthesizing multimodal data from websites, social media, and other online sources to produce oddly familiar yet captivating results. This shift raises significant ethical and methodological questions: How can we critically repurpose the outputs of AI models that are always rooted in platform infrastructures? Which methodological challenges and creative possibilities arise when the boundaries between context and scale blur? Are patterns and biases all there is? And what about scaling down?

The one-week winter school at the University of Siegen, organized by the Collaborative Research Center “Media of Cooperation”, invites participants to explore these questions about the implications of AI methods for new forms of sense-making and human-machine co-creation. The winter school is practice-based and brings together conceptual inputs, workshops, and group project sprints around two collaborative methods: probing and prompting.

Probing involves repurposing AI systems to explore their underlying mechanisms. It is a method of critical interrogation—for example, using specific collections of images as inputs to reveal how contemporary computer vision models process these inputs and generate descriptions. Probing not only serves to problematize the hidden architectures of AI but also allows us to critically assess their different ‘ways of knowing’—how can alternative computer vision features such as web detection or text-in-image recognition help us contextualize and interpret visual data?

Prompting, by contrast, refers to the practice of engaging GenAI models through input commands (prompts) to generate multimodal content. Prompting emphasizes the participatory aspect of AI, framing it as a tool for human-machine co-creation, but it also reveals the models’ limitations and inherent tensions. AI-generated creations captivate us, yet they also carry the risk of hallucination, or what the philosopher Harry Frankfurt might call “bullshit”: statements the models confidently present as facts, regardless of their detachment from reality.

The first day of the winter school will be hybrid; project group work will take place on site.

Program highlights

Participants will have the opportunity to explore these methods and adapt them to different research scenarios, including tracing the spread of propaganda memes and deepfakes, analyzing AI-generated images, and ‘jailbreaking’, that is, prompting against platforms’ content policy restrictions. Blending research practice and critical reflection, the winter school features:

- a keynote by Jill Walker Rettberg (University of Bergen) on “Qualitative methods for analysing generative AI: Experiences with machine vision and AI storytelling”

- two hands-on workshops on mixed techniques for probing and prompting, facilitated by Carlo de Gaetano (Amsterdam University of Applied Sciences), Andrea Benedetti (Density Design, Politecnico di Milano), Elena Pilipets (University of Siegen), and Marloes Geboers (University of Amsterdam)

- two project tracks that combine AI methods with qualitative approaches and ethical data storytelling:

Track I “Fabricating the People: Probing AI Detection for Audio-Visual Content in Turkish TikTok”, led by Lena Teigeler and Duygu Karatas (both University of Siegen)

Track II “Jail(break)ing: Synthetic Imaginaries of ‘sensitive’ AI”, led by Elena Pilipets (University of Siegen) and Marloes Geboers (University of Amsterdam)

Track I: Fabricating the People: Probing AI Detection for Audio-Visual Content in Turkish TikTok

Lena Teigeler & Duygu Karatas

Several brutal femicides in Türkiye in 2024 sparked a wave of outrage, expressed in protests both on the streets and on social media. The protesters demand the protection of women against male violence and measures against offenders, and they criticize the government under Recep Tayyip Erdoğan for failing to stand up for women’s rights, as demonstrated, for example, by Türkiye’s withdrawal from the Istanbul Convention in 2021. One of the cases that triggered the protests was allegedly connected to the Turkish “manosphere” and online “incel” community. The manosphere is an informal online network of blogs, forums, and social media communities focused on men’s issues, often promoting views on masculinity, gender roles, and relationships. At the core of these groups often lie misogynistic and anti-feminist views, and many of them foster toxic attitudes toward women and marginalized groups. Incels, short for “involuntary celibates”, are a subgroup of the broader manosphere: men who feel unable to form romantic or sexual relationships despite wanting them, and who often blame society or women for their frustrations.

The project investigates how the femicide cases are discussed and negotiated on Turkish TikTok, both by protesters and within the manosphere, and explores how these videos make use of generative AI. The use of AI in video creation can range from generating entire scenes, through creating sounds or deepfakes, to editing and stylisation. Taking a sample of TikToks associated with the recent wave of femicides as its starting point, the project uses AI methods for two purposes: 1) to detect the use of generative AI within the sample with the help of image labeling, ranging from fully generated images, videos, or sound to tools and techniques used in the creation and editing process, comparing different models for detection purposes; and 2) to trace, with the help of Web Detection, the spread of videos and images across platform borders and to identify content elements that are assembled or synthesized within TikToks.

The project aims to create a cartography of AI-based methods for investigating audio-visual content. It is part of the DFG-funded research project “Fabricating the People – negotiation of claims to representation in Turkish social media in the context of generative AI”.

Track II: Jail(break)ing: Synthetic Imaginaries of ‘sensitive’ AI

Elena Pilipets & Marloes Geboers

The rapid evolution of AI technology is pushing the boundaries of ethical AI use. Newer models like Grok-2 diverge from traditional, more restrained approaches, raising concerns about bias, moderation, and societal impact. This track explores how three generative AI models (X’s Grok-2, OpenAI’s GPT-4o, and Microsoft’s Copilot) reimagine controversial content in line with, or pushing against, the platforms’ content policy restrictions. To better understand each model’s response to sensitive prompts, we use a derivative approach: starting with images as inputs, we generate stories around them, which then guide the creation of new, story-based image outputs. In the process, we employ iterative prompting that blends “jailbreaking” (eliciting responses the model would typically avoid) with “jailing” (reinforcing platform-imposed constraints). Jail(break)ing, then, exposes the skewed imaginaries inscribed in the models’ capacity to synthesize compliant outputs: the more iterations it takes to generate a new image, the more clearly the latent spaces of the generative models come to the fore, laying bare the platforms’ data-informed structures of reasoning.

Addressing the performative nature of automated perception, the track examines six image formations collected from social media, which were then used as prompts to explore six issues: war, memes, art, protest, porn, and synthetics. In line with feminist approaches, we attend specifically to the hierarchies of power and (in)visibility perpetuated by GenAI, asking: Which synthetic imaginaries emerge from various issue contexts, and what do these imaginaries reveal about the models’ ways of seeing? To what extent can we repurpose generative AI as a storytelling and tagging device? How do different models classify sensitive and ambiguous images (along the trajectories of content, aesthetics, and stance)?

Facilitators will combine situated digital methods with experimental data visualization techniques tapping into the generative capacities of different AI models. The fabrication and collective interpretation of data with particular attention to the transitions between inputs and outputs will guide our exploration throughout. Participants will learn how to:

- Conduct “keyword-in-context” analysis of AI-generated stories to identify patterns or “formulas” within issue-specific imaginaries (where, who/what, and how).

- Perform network analysis of AI-generated tags, where input keywords are tags for the original images and output keywords are tags for AI-regenerated images.

- Design prompts to generate canvases that synthesize vernaculars of different transformer models.
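The first two analytical steps in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the facilitators’ actual workflow: it assumes that the AI-generated stories are plain strings and that each original image and its AI-regenerated counterpart come with a list of tags, and the function names (`kwic`, `tag_network`, `weighted_degree`) and sample data are hypothetical. Keyword-in-context (KWIC) lists each occurrence of a keyword together with a few neighbouring words; the tag network links every input tag to every output tag of the same image pair and weights each edge by the number of pairs that share it.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into word tokens (punctuation is dropped)."""
    return re.findall(r"[\w']+", text.lower())


def kwic(texts, keyword, window=3):
    """Keyword-in-context: for every occurrence of `keyword` in `texts`,
    return a (left context, keyword, right context) triple with up to
    `window` tokens on each side."""
    keyword = keyword.lower()
    hits = []
    for text in texts:
        tokens = tokenize(text)
        for i, token in enumerate(tokens):
            if token == keyword:
                left = " ".join(tokens[max(0, i - window):i])
                right = " ".join(tokens[i + 1:i + 1 + window])
                hits.append((left, token, right))
    return hits


def tag_network(image_pairs):
    """Weighted, directed edge list from input tags (original image) to
    output tags (AI-regenerated image). `image_pairs` holds one
    (input_tags, output_tags) tuple per image; each edge weight counts
    how many image pairs connect the two tags."""
    edges = Counter()
    for input_tags, output_tags in image_pairs:
        for src in set(input_tags):
            for dst in set(output_tags):
                # Prefix node names so the same word on both sides stays distinct.
                edges[("in:" + src, "out:" + dst)] += 1
    return edges


def weighted_degree(edges):
    """Sum edge weights per node to show which tags are most connected."""
    degree = Counter()
    for (src, dst), weight in edges.items():
        degree[src] += weight
        degree[dst] += weight
    return degree


# Hypothetical sample data, for illustration only.
stories = [
    "In a ruined city, a soldier walks through smoke at dawn.",
    "A crowd gathers in the city square as a soldier raises a flag.",
]
for left, kw, right in kwic(stories, "soldier", window=2):
    print(f"{left:>12} [{kw}] {right}")

pairs = [
    (["soldier", "smoke", "ruins"], ["soldier", "flag", "sunset"]),
    (["crowd", "flag"], ["crowd", "flag", "sunset"]),
]
edges = tag_network(pairs)
print(weighted_degree(edges).most_common(3))
```

The `in:`/`out:` prefixes keep the two sides of the network apart, so a tag that survives regeneration (here, `flag`) becomes an edge between two distinct nodes rather than a self-loop. An edge list of this kind could then be exported to a network tool such as Gephi for layout and visual analysis.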

The project builds on our earlier work, developing ethnographic approaches to explore cross-model assemblages of algorithmic processes, training datasets, and latent spaces.

Registration

Please register via the registration form by December 15, 2024. Registrations will be confirmed by December 20, 2024. Participation is limited to 20 people.

Venue

University of Siegen

Campus Herrengarten

Herrengarten 3

Room AH-A 125

57072 Siegen

Contact: Elena Pilipets